The keynote lecture at this year’s CSAE conference was given by Macartan Humphreys from Columbia University. Titled ‘Researchers just ran a randomized control trial in Africa and you won’t believe what they found: Reflections on evidence in the age of fake news and discredited expertise’, the lecture set out to be entertaining and topical at the same time. Lukas Hensel, DPhil student at CSAE, discusses the main takeaways of the keynote.

Building on his own experiences, Professor Humphreys began by describing how publication bias affects which kind of evidence receives the most attention. He pointedly laid out the trade-off between more interesting and more rigorous science. He then warned the audience of the dangers of a system that rewards interesting and counter-intuitive results while discounting the reliability and replicability of scientific studies during the peer-review process.

Professor Humphreys mentioned several threats to the credibility of scientific evidence in the public sphere: external validity, the communication of research findings and the competition for attention, and causal complexity. He also suggested several potential solutions, such as structural estimation, mechanism experiments and pre-registered meta-studies, each with its own advantages and drawbacks. In conclusion, he emphasized the importance of coordinated research agendas to ensure the comparability and, hence, the reliability of evidence produced by social science. It is impossible to summarize the multitude of great insights and arguments by Professor Humphreys within the scope of a blog entry, so I encourage you to watch the lecture yourself (you can watch it again here).

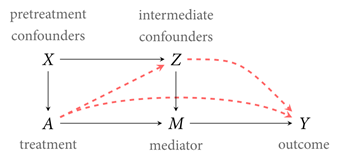

Instead, I would like to elaborate on the issue of causal complexity mentioned by Professor Humphreys. He went into some depth on the role of mediating variables for causal inference, and I was curious what we can learn from political scientists in this area. They seem to have thought about this problem quite a bit, but my impression is that economists do not usually think of mediating variables in the same way. The figure below is taken from Acharya et al. (2016) and depicts a conceptual framework of how mediating variables interact with the treatment effect.

The intermediate confounders Z are not observed and are the threat to identification in an experimental context. The pretreatment confounders X are only important for non-experimental studies. This framework allows us to distinguish between the direct treatment effect (red dashed line) and an indirect effect that runs through the mediator. Thinking within this framework could help experimental development economists to better understand treatment effects and heterogeneity in cases where the treatment is sufficiently complex to have multiple potential mechanisms, but the data are not rich enough to allow structural estimation. With assumptions about the relationship between treatment and mediator and about the role of intermediate confounders, it is possible to statistically test the hypothesis that the treatment effect operates exclusively through the mediator (without those assumptions, identification is impossible, as emphasized by Professor Humphreys). I am not sure how useful this test is in practice, but I think it can be an interesting addition to our tool-kit. If you have any experience with this, I would be very curious to hear about it.
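To make the role of these assumptions a bit more concrete, here is a minimal simulation sketch. It is not the estimator proposed by Acharya et al. (2016); it only illustrates why an unobserved confounder of the mediator and the outcome is a problem even when the treatment is randomized. All function names, coefficients and the data-generating process are my own assumptions, and for simplicity Z is drawn independently of the treatment. The true direct effect is set to zero, so the treatment works only through the mediator.

```python
import numpy as np


def ols(y, *regressors):
    """OLS coefficients of y on an intercept plus the given regressors."""
    X = np.column_stack([np.ones(len(y)), *regressors])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef


def simulate(n, direct, confounding, seed=0):
    """Hypothetical data-generating process (illustrative assumption only).

    t: randomized binary treatment
    z: confounder of mediator and outcome, NOT returned (unobserved)
    m: mediator, shifted by t and z
    y: outcome, shifted by t (direct effect), m and z
    """
    rng = np.random.default_rng(seed)
    t = rng.integers(0, 2, size=n).astype(float)
    z = rng.normal(size=n)
    m = 1.0 * t + confounding * z + rng.normal(size=n)
    y = direct * t + 2.0 * m + confounding * z + rng.normal(size=n)
    return t, m, y


def naive_direct_effect(t, m, y):
    """Coefficient on t when regressing y on t and m, ignoring z."""
    return ols(y, t, m)[1]


if __name__ == "__main__":
    for c in (0.0, 1.0):
        t, m, y = simulate(200_000, direct=0.0, confounding=c, seed=1)
        total = ols(y, t)[1]
        direct = naive_direct_effect(t, m, y)
        print(f"confounding={c}: total effect {total:.2f}, "
              f"naive direct effect {direct:.2f}")
```

Without confounding, the naive regression recovers a direct effect close to zero, so we would correctly fail to reject full mediation. With confounding, conditioning on the mediator opens a path through the unobserved Z, and in this particular setup the naive estimate moves to roughly -0.5 even though the true direct effect is still zero. Randomizing the treatment protects the total effect, but not the decomposition into direct and indirect parts.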

Acharya, A., Blackwell, M., & Sen, M. (2016). Explaining Causal Findings Without Bias: Detecting and Assessing Direct Effects. American Political Science Review, 110(3), 512–529.

The video stream of the keynote session can be found on our video and livestreams page.

This blog post was written by Lukas Hensel, a PhD student working on behavioural labour economics in developing countries at CSAE and the Department of Economics at the University of Oxford. You can follow him on Twitter via @Luthor113.